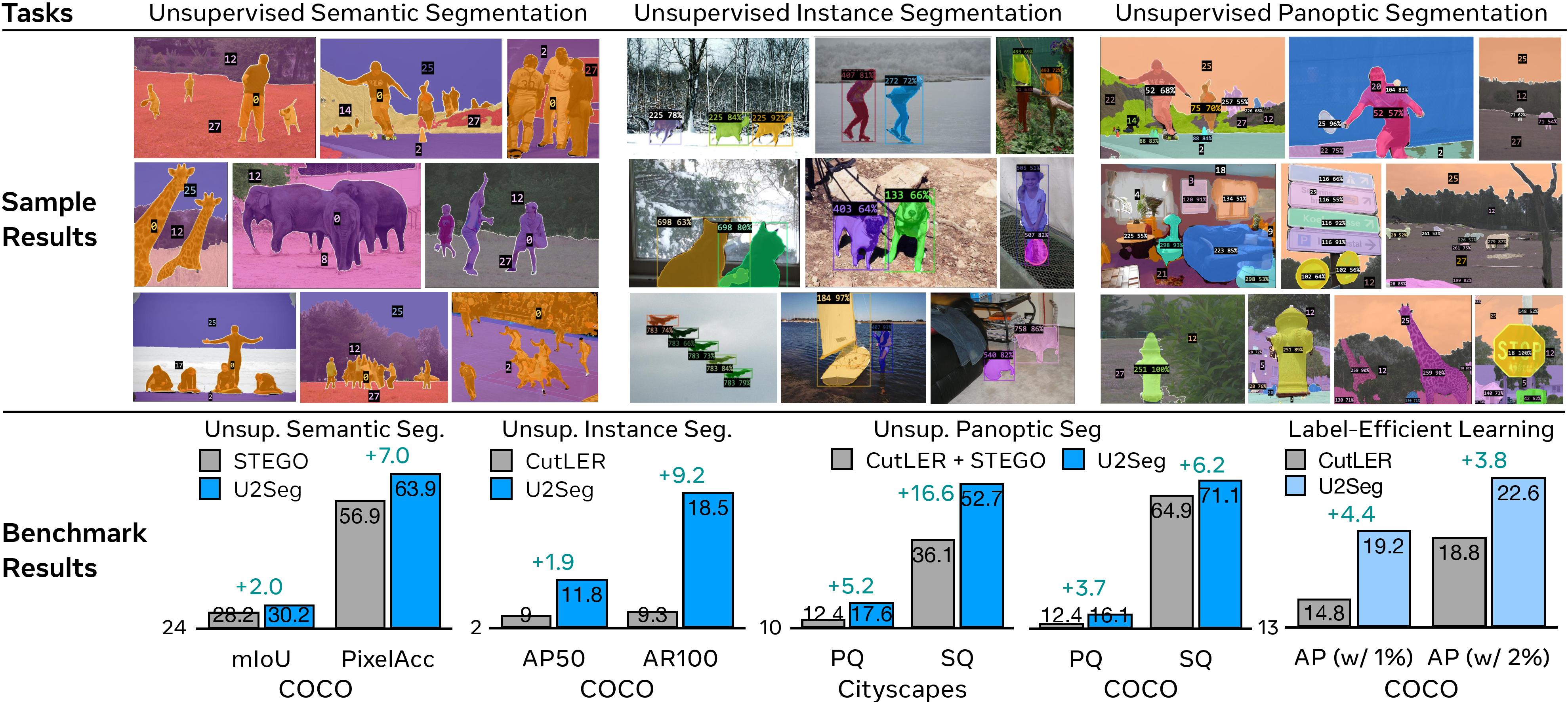

Several unsupervised image segmentation approaches have been proposed that eliminate the need for dense, manually annotated segmentation masks. However, current models handle either semantic segmentation (e.g., STEGO) or class-agnostic instance segmentation (e.g., CutLER) separately, but not both together (i.e., panoptic segmentation).

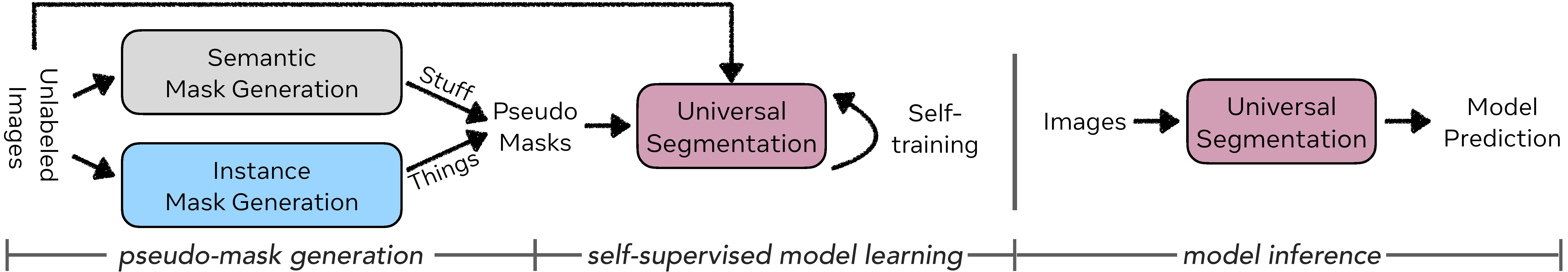

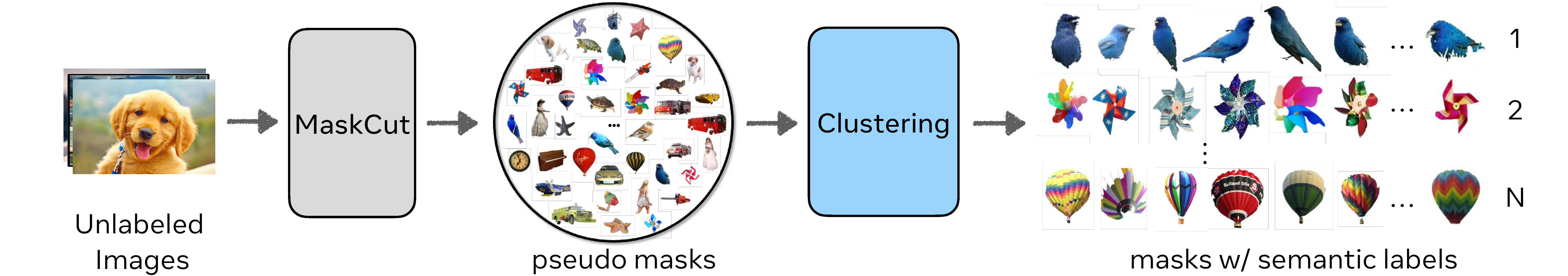

We propose an Unsupervised Universal Segmentation model (U2Seg) that performs several image segmentation tasks (instance, semantic, and panoptic) within a novel unified framework. U2Seg generates pseudo semantic labels for these tasks by leveraging self-supervised models followed by clustering; each cluster represents a different semantic and/or instance membership of pixels.
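The clustering step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that each candidate region has already been reduced to a single feature vector by some self-supervised backbone, and it uses plain k-means with a deterministic farthest-point initialization as a stand-in for whatever clustering procedure the method actually uses. The function names `farthest_point_init` and `kmeans_pseudo_labels` and the synthetic features are hypothetical.

```python
import numpy as np


def farthest_point_init(features, num_clusters):
    """Pick initial centroids greedily: each new one is the point farthest from those chosen so far."""
    centroids = [features[0]]
    for _ in range(num_clusters - 1):
        # Squared distance from every point to its nearest already-chosen centroid.
        nearest = np.min([((features - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(features[nearest.argmax()])
    return np.stack(centroids)


def kmeans_pseudo_labels(features, num_clusters, num_iters=20):
    """Cluster feature vectors; the cluster ID of each vector acts as its pseudo semantic label."""
    centroids = farthest_point_init(features, num_clusters).astype(float)
    for _ in range(num_iters):
        # Assign every feature to its nearest centroid (squared Euclidean distance).
        dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its members; keep it unchanged if the cluster is empty.
        for k in range(num_clusters):
            members = features[labels == k]
            if len(members) > 0:
                centroids[k] = members.mean(axis=0)
    return labels, centroids


# Synthetic stand-in for self-supervised embeddings: three well-separated groups of 50 vectors.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [10.0, 10.0], [-10.0, 10.0]])
features = np.concatenate([c + rng.normal(scale=0.5, size=(50, 2)) for c in centers])

pseudo_labels, _ = kmeans_pseudo_labels(features, num_clusters=3)
print(np.bincount(pseudo_labels))
```

In this toy setting the cluster IDs carry no class names; they only group similar regions, which is all a pseudo label needs to provide before self-training.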

We then self-train the model on these pseudo semantic labels, yielding substantial performance gains over specialized methods tailored to each task: a +2.6 $\text{AP}^{\text{box}}$ boost (vs. CutLER) in unsupervised instance segmentation on COCO and a +7.0 PixelAcc increase (vs. STEGO) in unsupervised semantic segmentation on COCOStuff. Moreover, our method establishes a new baseline for unsupervised panoptic segmentation, which has not been previously explored. U2Seg is also a strong pretrained model for few-shot segmentation, surpassing CutLER by +5.0 $\text{AP}^{\text{mask}}$ when trained in a low-data regime, e.g., with only 1% of COCO labels.
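Because unsupervised cluster IDs carry no class names, pixel accuracy for such models is commonly computed after matching predicted clusters one-to-one to ground-truth classes. The sketch below illustrates that idea under the assumption that this matching protocol applies here; the text above does not spell out the evaluation details. The function name `matched_pixel_accuracy` and the toy arrays are hypothetical, and the brute-force search over permutations is only practical for a handful of classes (a Hungarian solver such as `scipy.optimize.linear_sum_assignment` would be the usual choice at scale).

```python
from itertools import permutations

import numpy as np


def matched_pixel_accuracy(pred, gt, num_classes):
    """Pixel accuracy under the best one-to-one mapping from cluster IDs to class IDs."""
    # confusion[i, j] counts pixels with predicted cluster i and ground-truth class j.
    confusion = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(confusion, (pred.ravel(), gt.ravel()), 1)
    # Try every assignment of clusters to classes and keep the one with the most agreeing pixels.
    best = max(
        sum(confusion[i, perm[i]] for i in range(num_classes))
        for perm in permutations(range(num_classes))
    )
    return best / gt.size


# Toy 2x3 "images": the prediction uses different IDs than the ground truth.
pred = np.array([[1, 1, 0], [2, 2, 0]])
gt = np.array([[0, 0, 1], [2, 2, 2]])
acc = matched_pixel_accuracy(pred, gt, num_classes=3)
print(round(acc, 4))
```

Here the best mapping sends cluster 1 to class 0, cluster 0 to class 1, and cluster 2 to class 2, so five of the six pixels agree.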

We hope our simple yet effective method can inspire more research on unsupervised universal image segmentation.